I hate it when people think that more megapixels are better. They are wrong.

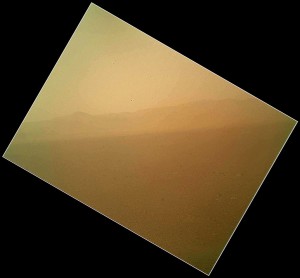

This has been bugging the shit out of me ever since the latest Mars lander touched down. Once people heard the probe had a two megapixel camera, the circle-jerk started. “HEY MAN WTF DID THEY USE THAT CAMERA MY ANDROID HAS AN 8 MEGAPIXEL NASA SUX GLGLGLGLG”

Okay, back up a few steps. Back in the old days, a camera worked by focusing light through a pinhole and onto a sheet of film, which chemically trapped that blast of light into something you could hang on a wall (after you ran the sheet through some developing process involving trays of chemicals in a dark room, or dropped the shit off at Walgreens and waited a week). That pinhole then evolved into a glass lens or a series of lenses that could be used to optically control what image ended up on the film.

Digital cameras do away with the film part by using a computer chip that’s sensitive to light, called an image sensor. That image sensor is divided up into millions of little pixels. The number of pixels determines the camera’s resolution. So if that sensor had 1024 by 1024 little square dots that reacted to light, it would be a one megapixel sensor. The sensors aren’t typically square, though; they’re usually in some rectangular format, which is why all of the pictures in your Facebook albums aren’t perfect squares. An average cell phone is going to have a sensor that has an active area of about 5.3 mm by 4.0 mm. A consumer point/shoot is going to be a couple times wider and taller. Canon’s DSLRs are either APS-C (22.2 x 14.8 mm) or APS-H (28.7 x 19 mm). There are full format cameras that are even bigger. Obviously, the bigger a sensor, the more it weighs, the more it costs, and the more power it uses.

When you take the size of the image sensor and divide it up by the number of pixels, you’re going to get the size of each pixel. It’s like cutting a cake. If I take one of those big sheet cakes from Kroger and cut it into four pieces, each piece is going to have 2876 Weight Watchers points in it, and will put you into a diabetic coma. If you have to cut up the same cake for an office of six hundred people, each piece would conveniently fit in a thimble. (A 16×24″ sheet cake cut into 2″ squares feeds 96 people, unless you’re serving it in Indiana, in which case it will serve about two dozen people, provided nobody’s scooter batteries die during the meal and leave them stranded away from the cake.)

The iPhone 4S uses a 4.54 x 3.42mm sensor. Its capture size is 3264×2448, or 8 megapixels. The Curiosity uses cameras based on the Kodak KAI-2020 sensor, which is a 1600×1200 capture size on a 13.36 x 9.52 mm chip. That means the iPhone has a pixel size of 1.4 micrometers (or microns) square, and the KAI-2020 has a pixel pitch of 7.4 microns. With a cell phone camera, you’re “serving” far more people cake, but with the larger format camera, you’re starting with a much bigger cake and sharing it with far fewer people. So it “serves” nowhere near as many people, but those are some giant chunks of cake.
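If you want to check the cake-cutting math yourself, here's a rough sketch in Python. It assumes square pixels and that the quoted sensor width is all active imaging area; the function names are made up for this example. One caveat: dividing the KAI-2020's 13.36 mm chip width by 1600 gives about 8.35 microns, not 7.4, so the sketch treats the chip dimensions as including some non-imaging border (my assumption, not something stated above) and works backward from the 7.4 micron pitch instead.

```python
def pixel_pitch_um(active_width_mm, pixels_across):
    """Pixel pitch in microns, assuming the whole width is active area."""
    return active_width_mm * 1000.0 / pixels_across


def active_width_mm(pitch_um, pixels_across):
    """Active width in mm implied by a given pitch and pixel count."""
    return pitch_um * pixels_across / 1000.0


# iPhone 4S numbers quoted above: 4.54 mm wide, 3264 pixels across.
iphone_pitch = pixel_pitch_um(4.54, 3264)
iphone_megapixels = 3264 * 2448 / 1_000_000

# KAI-2020 numbers quoted above: 7.4 micron pitch, 1600 pixels across.
# Assumption: the 13.36 mm figure is the whole chip, not just the active area.
kai_active_width = active_width_mm(7.4, 1600)
kai_megapixels = 1600 * 1200 / 1_000_000

print(f"iPhone 4S: {iphone_megapixels:.2f} MP, pitch {iphone_pitch:.2f} um")
print(f"KAI-2020:  {kai_megapixels:.2f} MP, active width {kai_active_width:.2f} mm")
```

The implied active width comes out narrower than the 13.36 mm chip, which is consistent with the chip carrying some border around the light-sensitive part.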

What does the size of the pixel mean? First, you get a cleaner, more faithful capture with a larger pixel size, because each pixel has more area to collect the light that’s transferred through the optics and onto the sensor. It’s why your old 110 or disc film camera took such shitty pictures, and your 35mm camera didn’t; the larger a camera’s format, the more area it had to capture the image. A small pixel size also limits the dynamic range, or the span between the brightest highlight and the darkest shadow the sensor can record in one shot. If you’ve ever tried to take a picture with your cell phone when an extremely bright light was in the image, and you got a shot of a bright ball of white surrounded by darkness, it’s because your camera couldn’t handle the dynamic range between the two. And also, the smaller the pixel, the noisier the picture, especially in low light conditions.
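To put a rough number on the noise part, here's a back-of-the-envelope sketch. It leans on a textbook idealization, not anything measured from these cameras: same exposure, same light per unit area, same sensor efficiency, and photon shot noise as the only noise source (read noise ignored). Under those assumptions the light a pixel collects scales with its area, and the signal-to-noise ratio scales with the square root of that. The function names are hypothetical.

```python
import math


def relative_light(pitch_a_um, pitch_b_um):
    """How many times more light pixel A collects than pixel B.

    Assumes square pixels and identical illumination per unit area.
    """
    return (pitch_a_um / pitch_b_um) ** 2


def relative_shot_noise_snr(pitch_a_um, pitch_b_um):
    """Ratio of shot-noise-limited SNR, pixel A versus pixel B.

    Shot noise follows Poisson statistics, so SNR grows with the
    square root of the collected light.
    """
    return math.sqrt(relative_light(pitch_a_um, pitch_b_um))


# 7.4 micron (KAI-2020) versus 1.4 micron (iPhone 4S) pixels.
light_ratio = relative_light(7.4, 1.4)
snr_ratio = relative_shot_noise_snr(7.4, 1.4)

print(f"Light per pixel: about {light_ratio:.0f}x")
print(f"Shot-noise SNR:  about {snr_ratio:.1f}x")
```

Real sensors differ in plenty of other ways, so treat this as a sense of scale and not a spec sheet.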

That doesn’t mean all high-megapixel cameras are junk, just high-megapixel cameras with small image sensors. If you go pick up a Nikon D800, it’s a 36 megapixel camera, but it’s got a 24 x 35.9 mm sensor, so it’s a 4.88 micron pixel pitch. That’s not quite the 7ish of NASA’s camera, but it’s much better than the 1.4 of an iPhone. Of course, that D800 is going to cost you three grand plus lenses, and it’s not going to fit in your pocket or make phone calls or play Angry Birds.
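You can also estimate pixel pitch when all you have is the megapixel number on the box and the sensor dimensions. A minimal sketch, assuming square pixels that tile the whole sensor and a nominal 36 million pixels (the function name is made up for this example):

```python
import math


def pitch_from_megapixels_um(width_mm, height_mm, megapixels):
    """Estimate pixel pitch in microns from sensor size and megapixel count.

    Assumes square pixels covering the full sensor area.
    """
    area_um2 = (width_mm * 1000.0) * (height_mm * 1000.0)
    pixel_count = megapixels * 1_000_000
    return math.sqrt(area_um2 / pixel_count)


# Nikon D800 numbers quoted above: 35.9 x 24 mm sensor, 36 megapixels.
d800_pitch = pitch_from_megapixels_um(35.9, 24.0, 36)
print(f"D800 pitch: roughly {d800_pitch:.2f} microns")
```

The marketing megapixel figure is rounded, so the estimate lands within a couple hundredths of a micron of the 4.88 quoted above, not exactly on it.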

There are a bunch of other factors involved in the difference between the Curiosity’s cameras and the ones on your phone. First, your phone doesn’t have to deal with radiation or temperature extremes. Also, NASA shopped around for a camera in 2004, and then tested the living fuck out of it before putting it on a rocket into space. Your camera phone probably has a couple of tiny plastic lenses, while NASA hung much more complex optics off of their units. And their budget was slightly bigger than that of a cell phone manufacturer, so they didn’t have to pinch pennies on the sensors they used. And NASA typically takes a bunch of pictures, sends them over the slow link back to Earth, then stitches them into the much larger images that you see.

It’s a shame that people are taught to judge hardware by numbers like this, and that we’re marketed hardware based on them. I remember when I worked at Samsung, a meeting erupted into a giant argument, because everyone but me and another guy believed — KNEW — that a higher megapixel camera was always better, because… it had more megapixels. It’s like when people talk about how their computer is so much better because it has a higher clock speed, without mentioning that their OS is burning way more cycles running crapware and antivirus software. The 450 horsepower in a 36,000 pound low-geared John Deere is not better than the 430 horsepower in a 3200 pound Corvette. It isn’t.